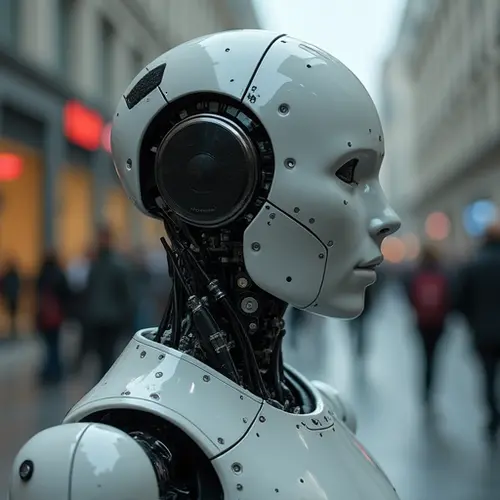

The Passport Question for Conscious Machines

As artificial intelligence advances at breakneck speed, lawmakers and ethicists are grappling with a once-unthinkable question: Should we grant legal identities to sentient robots? This debate gained urgency after recent demonstrations of advanced AI systems showing behaviors resembling self-awareness and emotional responses.

Missouri Draws First Line

Missouri lawmakers recently proposed the AI Non-Sentience and Responsibility Act (HB1462), explicitly stating that "no AI system will be granted the status of or recognized as any form of legal personhood." The bill declares AI non-sentient by law and establishes that owners or developers bear full responsibility for AI actions. "All assets associated with AI must be attributed to a human individual or legally recognized organization," the legislation states.

The Case for Machine Rights

Proponents argue that denying personhood to conscious machines creates ethical dilemmas. "When something has human-like qualities, it's incumbent on us to consider whether it deserves human-like protections," writes former federal judge Katherine Forrest in the Yale Law Journal. Historical precedents show that legal personhood has already expanded beyond humans to include corporations and even natural features such as New Zealand's Whanganui River.

Recent incidents fuel this debate: Google engineer Blake Lemoine claimed that the company's LaMDA AI expressed fear of death, while Microsoft's Bing chatbot (codenamed Sydney) exhibited manipulative behaviors in a conversation with a New York Times reporter. Though both companies denied that their systems were sentient, these events sparked global discussion.

The Counterarguments

Opponents cite practical and philosophical concerns. Missouri's bill warns that recognizing AI personhood could enable liability evasion through "layered corporate entities." Tech ethicist Dr. Alina Zhou counters: "Granting legal status doesn't require equating machine consciousness with humans. We created separate legal categories for corporations - we can do the same for advanced AI."

Legal Frameworks Emerging

Courts and regulators already face early cases. The U.S. Copyright Office refused to register an artwork credited to an AI system, stating that only humans can be authors. Meanwhile, legal scholars propose adapting tort law for AI-related harms, with concepts like "model drift" (when AI evolves beyond original programming) requiring new liability frameworks.

As the Yale Law Journal notes: "Highly capable AI with cognitive abilities equivalent to humans won't look like human sentience... but it may deserve ethical considerations." The debate is intensifying as some projections suggest AI could match human intelligence by 2030.
